On December 31st, 1999, I rallied my best Beanie Babies and prepared for death. Although skeptical of the hype, I gathered my possessions like a pharaoh preparing for the afterlife, just in case. My theatrics were in vain, and the world entered the new millennium on a mercifully anticlimactic note. When the sky didn’t fall, Y2K fell sharply out of vogue, and the tidal wave of novelty items that bore the brand was relegated to the bargain bins of history.

The custom of celebrating New Year’s Eve on December 31st is inherited from the Roman Empire: it follows the Julian calendar and pays tribute to Janus, the god of transitions. A viral meme in 2023 advanced a theory that men think constantly about the Roman Empire. This fleeting phenomenon relies on a fabricated version of history designed to bolster traditional Western masculinity, an identity whose present and future status is in jeopardy. The meme not only implies that men and women exist in separate psychic silos but also stresses how our envisioned future is shaped by a fluid interpretation of the past. Janus is two-faced, able to look backwards and forwards simultaneously, a gaze which has determined how the occasion is observed. Y2K was NYE on steroids, with all the same ingredients but cataclysmically heightened stakes. In the end, it was an empty threat, tragically mischaracterizing the looming menace in the 21st century.

In 2023, Y2K was a prolific hashtag on Depop, and the era’s aesthetics of repurposed psychedelia (now two cycles removed from their origins in the 1960s) loom large in pop culture. More than a tech scare, Y2K ignited apocalyptic fears and survivalist fervour. It split public opinion between doomsday believers and skeptics. This was a divided reality, both familiar and amplified in 2023, seven years deep into the post-truth era and on the cusp of a transformative age of AI.

In 2023, there was nostalgia for bucket hats and for a time of manageable technological (non) crisis. AI has emerged at the forefront of public consciousness following the rapid-fire rollout of generative models like ChatGPT and Stable Diffusion, forcing the world to take it seriously. A concomitant chorus of AI anxiety has also arisen. The art world is no stranger to precarity; however, only recently have creative workers become the champions of this particular cautionary refrain, recognizing the threat this technology presents to our livelihoods. Alongside these concerns, the ability of these programs to generate convincing falsehoods contributes to a volatile climate of misinformation and distrust that has been spreading like wildfire since the pandemic.

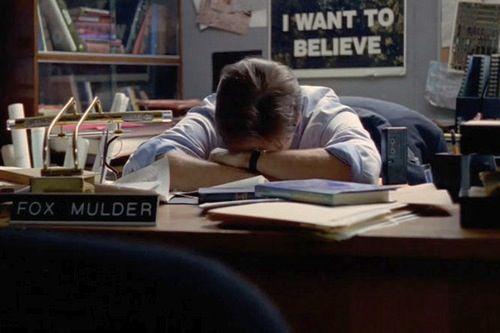

During the lockdown, I rewatch the rewatchable seasons of X-Files, which portrays conspiracy theory from an exponentially more flattering angle than I witness today. In their essay-chapbook “Fear Indexing the X-Files,” artist Steven Warwick and theorist Nora Khan claim that the “conspiracy theory serves as a buffer against reality,” providing a “play zone” to “try on a skeptical mindset.”1 In today’s climate, there’s nothing punk about being conspiracy-curious, and even a superficial flirtation with the notion feels like a signal of approval toward a hate-filled discourse. I consider these questionable optics every time I take a Zoom call, aware of my poster which reads “I Want to Believe” (a copy of Fox Mulder’s office decor and personal mantra) looming in the backdrop.

Film still of Fox Mulder's "I WANT TO BELIEVE" poster in X-Files, 1993-2002 American television series created by Chris Carter.

At the onset of this current fraught era of hyper-politicization, polarization, and fluid reality, there were predictions that it would spark a politically charged artistic renaissance—it didn’t. Rather than embodying a spirit of resistance, the art of this period often erred on the side of priggishness and lacked any real-world stakes. Addressing any zeitgeist, whether political, cultural, or technological, comes with risks, and as I write this year-end review, I am aware that I am courting this risk more so than I typically would.

Occasionally, the risk pays off, as is the case with Canadian author Naomi Klein's latest book, Doppelganger (2023), which ambitiously delves into the world of online misinformation. Tapping into the abundant metaphor of the double, Doppelganger was spurred by Klein's indignation at being perpetually mistaken for Naomi Wolf, the author of The Beauty Myth (1990), who, more recently, has become an unlikely ally of the alt-right's crusade against public health measures and vaccines. Klein characterizes the unhinged digital domain Wolf inhabits as the "mirror world," expressing unease at its eerily familiar polemics. She is troubled by the distorted populist rhetoric of figures like Steve Bannon, a right-wing media figure and former advisor to Trump. What particularly disturbs Klein is Bannon’s proclivity to remix leftist talking points into what she refers to as "fact-free clout-chasing remixes of The Shock Doctrine."2

Accompanying Klein's book is a trailer created by artist Colby Richardson. The trailer opens on an iPad playing a video of Klein introducing herself and her predicament. As it progresses, we’re shown a rapid sequence of content, including images which possess the uncanny-valley watermark of experiments in AI and the climactic clip from The Parent Trap when Lindsay Lohan comes face to face with her double. Throughout the video, shots gradually reveal the presence of yet another embedded screen, as Klein's voice narrates: "When I look at the mirror world, I don't see disagreements over a shared reality; I see disagreements about what is real and what is a simulation." AI exacerbates an already unstable reality by flooding our feeds with content that may be entirely fabricated. The severe implications of the deepfake era bring me back to another timeless slogan from the X-Files, the yin to the yang of “I want to believe”: “Trust no one.”

Despite the potential for social damage, deceit is not the sum of AI’s potential uses. 2023 has witnessed a renewed wave of AI art-making. There is warranted skepticism of this trend, both because novelty is a quality with diminishing returns and because many of the alarms that have been raised have not been adequately addressed. Klein names AI as a threat to our already fractured sense of reality but is sparse on exposition. I wonder if this is because generative AI has evolved so rapidly that it presents a challenge to capture in print, a formally conservative medium, or if it's just not her wheelhouse. Echoing Klein’s concerns, artist Trevor Paglen recently coined the term “psy-op capitalism” as the successor to surveillance capitalism, describing a new era of insidious influence and control projected through digital channels and splintered consensus reality.

Belief was a contentious thing to hold in 2023. While credible proof may be experiencing a flop era, leaps of faith are having a moment. Between the questionably sincere turn towards religiosity amongst the extremely online and the trend of New Age influence, it seems apparent that we all want to believe. At the centre of the messy Venn diagram between conspiracy, esoteric belief, and AI is the work of artist Nina Hartmann, whose recent show Soft Power at Silke Lindner features the artist’s encaustic and resin sculptures. Hartmann utilizes AI to manipulate photographs sourced from government materials and online UFO forums, integrating these obscured images into post-truth sigils that evoke paranoia and spirituality. Soft Power operates under a veil of moral ambiguity that for a period in recent memory felt off-limits. There’s a striking timeliness to this body of work that, to me, advances the idea that as metanarratives deteriorate, we are more willing to embrace the metaphysical ones.

Few figures are as synonymous with the new wave of AI as artists/musicians Holly Herndon and Mat Dryhurst. For several years, the virtually attuned duo have been developing Holly+, a digital doppelganger and “vocal deepfake” trained on Herndon’s own voice.3 I thought about Holly+ while reading Doppelganger. The two projects share common ground, both fundamentally concerned with doubles and with how identity operates in online spaces. It’s tempting to cast the two projects as opposites at the enduring poles of technophobia and techno-determinism (Klein’s doppelganger crisis symbolizes the collective downward spiral of truth, whereas Holly+ delivers Dolly Parton covers); however, the two projects complement one another. Both anticipate a situation in which AI accelerates problems endemic to the information age such as intellectual property, public trust, and misinformation, and both strike a delicate balance between introspection and analysis. Herndon and Dryhurst have used their experiments in AI to ignite a discussion about how to best preempt the impact on creative fields by creating a layer of consent around data scraping. In Herndon’s words, “How do you build a new economy around this where people aren’t totally fucked?”4

Like a 9-year-old embracing her death at the cusp of the millennium, articles discussing the potential consequences of AI have a flair for the dramatic. This tracks with past discourse around technology which has tended to veer either utopian or alarmist. This past March, in an opinion piece published by The New York Times, the authors use vivid imagery of plane crashes and Terminators to paint a bleak future. Coincidentally, the same week the article is released, I am forced to face my own vulnerability when I share an image of the pope in a Balenciaga-esque coat that turns out to be an AI-generated fake. Later, I will sheepishly backtrack and try to justify my actions by claiming that the image is culturally significant, regardless of its authenticity. While the stakes of this deception are relatively low, the thought of being fooled by an AI-generated image is a chilling omen to those, like me, who believed they were immune.

Balenciaga Pope, an AI-generated image originating from Jake Flores.

As I come to terms with my own delusions of invulnerability to fake news, shattered by a well-dressed pope, I wonder how ego has influenced the response of the creative community towards AI. Parallel to the fear of being replaced, there is a sense of diminishing exceptionality. Back in the early days of post-internet art, American artist Cory Arcangel addressed a similar insecurity, referring to it as “fourteen-year-old Finnish-kid syndrome.” This condition is characterized by a semi-optimistic resignation that as the Web widens the sightline of what art is visible, there will always be an eighth grader across the world making work better than his own.5

In 1990, in the pages of Artforum, philosopher Vilém Flusser (sort of) predicted the AI pope. In an article simply titled “Popes,” Flusser imagines an artificial intelligence capable of translating images and concepts into legible language and vice versa. The papal paradigm is articulated as one that translates between disparate realms, heaven and hell, image and text. This divine brand of diplomacy is applied to a theoretical artificial intelligence, Wittgenstein, bridges, and art critics. Preempting the crisis of ego which would befall the art world, Flusser assures his milieu that the logic of cultural codes is too knotty to function without a spiritual guide to act as a decoder.

Artificially intelligent popes can only build bridges in the gray zones where universes overlap, not where they are separated by abysses…It is this sacred, meaningless, absurd abyss to which pontiffs and art critics attempt to give meaning. Now that artificial intelligences seem to do precisely that, but cannot, pontiffs are needed more than ever.6

Today, AI has mastered legibility, essentially automating artless writing tasks like work emails and cover letters. Reflecting this teleological trek towards neutrality is the terminology of “natural language processing” (NLP), the branch of AI which deals with language comprehension and production. Early experiments with chatbots often gained popularity due to their tendency to produce incoherent rants. However, as anyone who has ever had an unpredictable friend or relative can attest, volatility is only tolerable for so long. As machine learning has moved quickly to perfect a banal and coherent voice, the online aesthetic is moving in the opposite direction, towards illegibility, embracing error and mixing symbols. Transitioning out of 2023 and into 2024, incoherence appears to be the most transgressive move you can make in an online environment haunted by sophisticated NLP models.

Historically speaking, technological breakthroughs, while often sparking existential creative crises, tend to foster new perspectives that highlight, rather than undermine, the human essence in art. The camera didn’t render painting obsolete and the internet didn’t democratize the art world. It was incorrectly presumed that a political crisis would produce better art, and it didn’t pan out, but maybe we’ll fare better with an existential crisis. We all want to believe, even as the conditions to do so become increasingly inhospitable.

Happy New Year.